
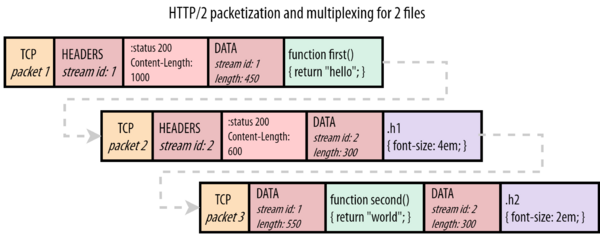

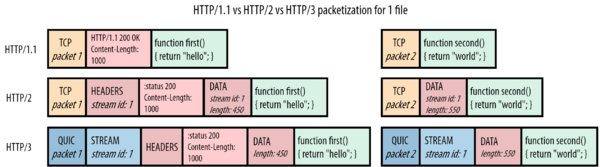


Latest revision as of 09:28, 18 December 2023 (Monday)

Head-of-line blocking (HOL blocking) in computer networking is a performance-limiting phenomenon that occurs when a line of packets is held up in a queue by the first packet. Solving HOL blocking was one of the main motivations behind not just HTTP/3 and QUIC but also HTTP/2.

The HOL blocking problem

It’s difficult to give you a single technical definition of HOL blocking, as this blogpost alone describes four different variations of it. A simple definition however would be:

When a single (slow) object prevents other/following objects from making progress

A good real-life metaphor is a grocery store with just a single check-out counter. One customer buying a lot of items can end up delaying everyone behind them, as customers are served in a First In, First Out manner. Another example is a highway with just a single lane. One car crash on this road can end up jamming the entire passage for a long time. As such, even a single issue at the “head” can “block” the entire “line”[1].

This concept has been one of the hardest Web performance problems to solve.

HOL blocking in HTTP/1.1

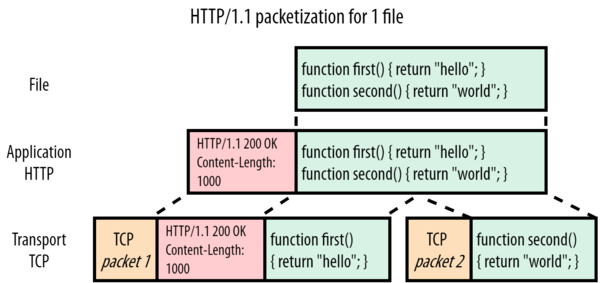

HTTP/1.1 is a protocol from a simpler time. A time when protocols could still be text-based and readable on the wire. This is illustrated in Figure 1 below:

We can see that the HTTP aspect itself is straightforward: it just adds some textual “headers” (red) directly in front of the plaintext file content or “payload”. Headers + payload are then passed down to the underlying TCP (orange) for actual transport to the client. For this example, let’s pretend we cannot fit the entire file into 1 TCP packet and it has to be split up into two parts.

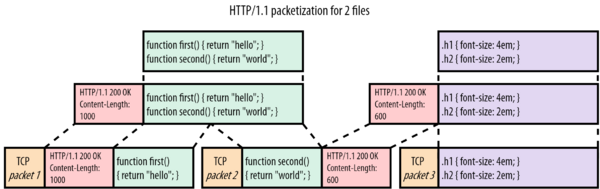

Now let’s see what happens when the browser also requests style.css in Figure 2:

In this case, we are sending style.css (purple) after the response for script.js has been transmitted. The headers and content for style.css are simply appended after the JavaScript (JS) file. The receiver uses the Content-Length header to know where each response ends and another starts (in our simplified example, script.js is 1000 bytes large, while style.css is just 600 bytes).
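To make the role of Content-Length concrete, here is a minimal sketch of how a receiver could split two back-to-back responses out of one byte stream. The helper names `make_response` and `split_responses` are hypothetical, the parsing is deliberately simplified (no chunked encoding, no error handling), and the 1000-byte and 600-byte bodies mirror the simplified example above.

```python
def make_response(body: bytes) -> bytes:
    """Build a bare-bones HTTP/1.1 response: textual headers, then the payload."""
    head = f"HTTP/1.1 200 OK\r\nContent-Length: {len(body)}\r\n\r\n"
    return head.encode("ascii") + body


def split_responses(stream: bytes) -> list[tuple[dict[str, str], bytes]]:
    """Split concatenated responses, using Content-Length to find each boundary."""
    responses = []
    pos = 0
    while pos < len(stream):
        # The header block ends at the first blank line after `pos`.
        header_end = stream.index(b"\r\n\r\n", pos)
        lines = stream[pos:header_end].decode("ascii").split("\r\n")
        headers = {}
        for line in lines[1:]:  # lines[0] is the status line
            name, _, value = line.partition(":")
            headers[name.strip().lower()] = value.strip()
        body_start = header_end + 4
        body_end = body_start + int(headers["content-length"])
        responses.append((headers, stream[body_start:body_end]))
        pos = body_end  # the next response starts right after this body
    return responses


script_js = b"j" * 1000  # stand-in for script.js
style_css = b"c" * 600   # stand-in for style.css
wire = make_response(script_js) + make_response(style_css)
parsed = split_responses(wire)
```

Note that the receiver can only find the start of style.css after it has consumed all 1000 bytes of script.js, which is exactly why the second response cannot overtake the first.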

All of that seems sensible enough in this simple example with two small files. However, imagine a scenario in which the JS file is much larger than the CSS file (say 1MB instead of 1KB). In this case, the CSS would have to wait until the entire JS file has been downloaded, even though it is much smaller and thus could be parsed/used earlier. Visualizing this more directly, using the number 1 for large_script.js and 2 for style.css, we would get something like this:

11111111111111111111111111111111111111122

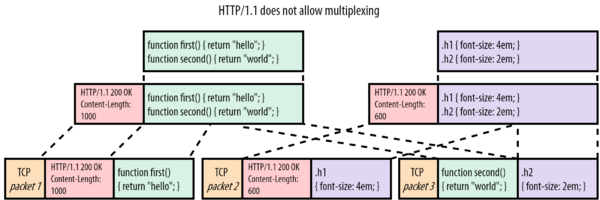

HTTP/1.1 does not allow multiplexing

The “real” solution to this problem would be to employ multiplexing. If we can cut up each file’s payload into smaller pieces or “chunks”, we can mix or “interleave” those chunks on the wire: send a chunk for the JS, one for the CSS, then another for the JS again, etc. until the files are downloaded. With this approach, the smaller CSS file will be downloaded (and usable) much earlier, while only delaying the larger JS file by a bit. Visualized with numbers we would get:

12121111111111111111111111111111111111111

The main problem here is that HTTP/1.1 is a purely textual protocol that only appends headers to the front of the payload. It does nothing further to differentiate individual (chunks of) resources from one another.

This is a fundamental limitation of the way the HTTP/1.1 protocol was designed. If you have a single HTTP/1.1 connection, resource responses always have to be delivered in-full before you can switch to sending a new resource. This can lead to severe HOL blocking issues if earlier resources are slow to create (for example a dynamically generated index.html that is filled from database queries) or, as above, if earlier resources are large.
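The effect of interleaving on completion time can be checked with a few lines of code. This is only an illustrative sketch: the function name `completion_slots` is hypothetical, each character stands for one equally sized chunk, and the chunk counts (39 for resource 1, 2 for resource 2) are assumed for the example rather than taken from the article's figures.

```python
def completion_slots(order: str) -> dict[str, int]:
    """For each resource, return the 1-based slot in which its last chunk is sent."""
    return {res: order.rindex(res) + 1 for res in sorted(set(order))}


# Sequential delivery, the only option on a single HTTP/1.1 connection.
sequential = "1" * 39 + "22"
# Interleaved delivery: the two chunks of resource 2 are mixed in early.
interleaved = "1212" + "1" * 37

seq_done = completion_slots(sequential)
mix_done = completion_slots(interleaved)
```

With these assumed sizes, the small resource finishes in slot 4 instead of slot 41 when interleaved, while the large resource is delayed by only two slots (41 instead of 39).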

HOL blocking in HTTP/2

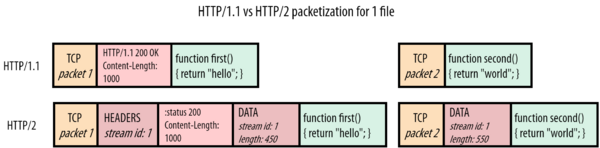

Browsers worked around HTTP/1.1’s HOL blocking by opening several parallel TCP connections per origin. The goal for HTTP/2 was therefore quite clear: make it possible to move back to a single TCP connection by solving the HOL blocking problem. Stated differently: we want to enable proper multiplexing of resource chunks. This wasn’t possible in HTTP/1.1 because there was no way to discern to which resource a chunk belongs, or where it ends and another begins. HTTP/2 solves this quite elegantly by prepending small control messages, called frames, before the resource chunks.

HTTP/2 puts a so-called DATA frame in front of each chunk. These DATA frames mainly contain two critical pieces of metadata. First: which resource the following chunk belongs to. Each resource’s “bytestream” is assigned a unique number, the stream id. Second: how large the following chunk is. The protocol has many other frame types as well, of which Figure 5 also shows the HEADERS frame. This again uses the stream id to indicate which response these headers belong to, so that headers can even be split up from their actual response data.
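As a rough illustration of the two pieces of metadata described above, the sketch below packs and unpacks the 9-byte HTTP/2 frame header (24-bit payload length, 8-bit type, 8-bit flags, and a 31-bit stream id after one reserved bit). The function names and the sample payloads are hypothetical, and the sketch ignores padding, flags semantics, and every frame type other than DATA.

```python
import struct

DATA = 0x0  # frame type code for DATA frames


def encode_frame(frame_type: int, stream_id: int, payload: bytes, flags: int = 0) -> bytes:
    """Prefix a payload with the 9-byte frame header."""
    length = len(payload)
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,  # 24-bit length, split as 8 + 16 bits
        frame_type,
        flags,
        stream_id & 0x7FFFFFFF,         # top bit is reserved
    )
    return header + payload


def decode_frames(buf: bytes):
    """Yield (frame_type, stream_id, payload) for each frame in the buffer."""
    pos = 0
    while pos < len(buf):
        hi, lo, frame_type, _flags, sid = struct.unpack_from(">BHBBI", buf, pos)
        length = (hi << 16) | lo
        payload = buf[pos + 9 : pos + 9 + length]
        yield frame_type, sid & 0x7FFFFFFF, payload
        pos += 9 + length


# Interleave chunks of two resources on one connection (made-up payloads).
wire = (
    encode_frame(DATA, 1, b"JS-part-1")
    + encode_frame(DATA, 2, b"CSS-all")
    + encode_frame(DATA, 1, b"JS-part-2")
)

# The receiver uses the stream id to route each chunk to the right resource.
streams: dict[int, bytes] = {}
for frame_type, stream_id, payload in decode_frames(wire):
    if frame_type == DATA:
        streams[stream_id] = streams.get(stream_id, b"") + payload
```

Because every chunk announces its own stream id and length, the receiver no longer needs the responses to arrive one after another.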

Using these frames, it follows that HTTP/2 indeed allows proper multiplexing of several resources on one connection:

An important consequence of HTTP/2’s approach is that we suddenly also need a way for the browser to communicate to the server how it would like the single connection’s bandwidth to be distributed across resources. Put differently: how resource chunks should be “scheduled” or interleaved. If we again visualize this with 1’s and 2’s, we see that for HTTP/1.1, the only option was 11112222 (let’s call that sequential). HTTP/2 however has a lot more freedom:

- Fair multiplexing (for example two progressive JPEGs): 12121212

- Weighted multiplexing (2 is twice as important as 1): 221221221

- Reversed sequential scheduling (for example 2 is a key Server Pushed resource): 22221111

- Partial scheduling (stream 1 is aborted and not sent in full): 112222
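The four patterns above can be reproduced with a simple weighted round-robin loop. This is a sketch for illustration only, not HTTP/2's actual prioritization mechanism: the `schedule` function and its parameters are hypothetical, and an aborted stream is modeled simply as a stream with fewer chunks to send.

```python
def schedule(chunks: dict[str, int], weights: dict[str, int], order: list[str]) -> str:
    """Weighted round-robin: each round, every stream sends up to `weights[s]` chunks."""
    if any(weights[s] <= 0 for s in order):
        raise ValueError("weights must be positive")
    remaining = dict(chunks)
    out = []
    while any(remaining[s] for s in order):
        for s in order:
            n = min(weights[s], remaining[s])
            out.append(s * n)
            remaining[s] -= n
    return "".join(out)


# Fair: one chunk per stream per round.
fair = schedule({"1": 4, "2": 4}, {"1": 1, "2": 1}, ["1", "2"])
# Weighted: stream 2 gets two chunks for every chunk of stream 1.
weighted = schedule({"1": 3, "2": 6}, {"1": 1, "2": 2}, ["2", "1"])
# Reversed sequential: stream 2 goes first and is sent in full.
reversed_seq = schedule({"1": 4, "2": 4}, {"1": 4, "2": 4}, ["2", "1"])
# Partial: stream 1 is aborted after two chunks.
partial = schedule({"1": 2, "2": 4}, {"1": 4, "2": 4}, ["1", "2"])
```

The same loop yields all four orderings purely by changing the weights and the visiting order, which is the kind of freedom the browser has to communicate to the server.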

TCP HOL blocking

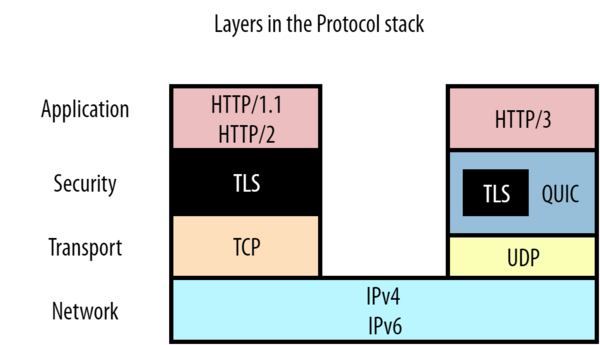

HTTP/2 only solved HOL blocking at the HTTP level, what we might call “Application Layer” HOL blocking. There are however other layers below that to consider in the typical networking model.

HTTP/2 sees multiple, independent resource bytestreams, but TCP sees just a single, opaque bytestream.

What should happen if TCP packet 2 is lost in the network, but somehow packet 1 and packet 3 do arrive? Remember that TCP doesn’t know it’s carrying HTTP/2, just that it needs to deliver data in-order. As such, it knows the contents of packet 1 are safe to use and passes those to the browser. However, it sees that there is a gap between the bytes in packet 1 and those in packet 3 (where packet 2 fits), and thus cannot yet pass packet 3 to the browser. TCP keeps packet 3 in its receive buffer until it receives the retransmitted copy of packet 2 (which takes at least one round trip to the server), after which it can pass both to the browser in the correct order. Put differently: the lost packet 2 is HOL blocking packet 3!

Another example is the situation where packet 1 is lost, but 2 and 3 are received. TCP will again hold back both packets 2 and 3, waiting for 1. However, at the HTTP/2 level, the data for stream 2 (the CSS file) is completely present in packets 2 and 3 and doesn’t have to wait for packet 1’s retransmit. The browser could have parsed, processed, and used the CSS file, but it is stuck waiting for the JS file’s retransmit.

In conclusion, the fact that TCP does not know about HTTP/2’s independent streams means that TCP-Layer HOL blocking (due to lost or delayed packets) also ends up HOL blocking HTTP!
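The second loss scenario can be simulated with a small in-order receive buffer. This is a simplified sketch: the class name `InOrderReceiver` is hypothetical, and it tracks whole packet numbers rather than the byte sequence numbers that real TCP uses.

```python
class InOrderReceiver:
    """Releases packets to the application strictly in packet-number order."""

    def __init__(self) -> None:
        self.next_seq = 1                     # next packet number we may release
        self.buffer: dict[int, bytes] = {}    # out-of-order packets held back
        self.delivered: list[bytes] = []      # what the browser has seen so far

    def receive(self, seq: int, data: bytes) -> list[bytes]:
        """Store a packet and return whatever can now be released in order."""
        self.buffer[seq] = data
        released = []
        while self.next_seq in self.buffer:
            released.append(self.buffer.pop(self.next_seq))
            self.next_seq += 1
        self.delivered.extend(released)
        return released


rx = InOrderReceiver()
# Packet 1 (JS data for stream 1) is lost; packets 2 and 3 carry the whole CSS file.
after_2 = rx.receive(2, b"CSS-part-1")
after_3 = rx.receive(3, b"CSS-part-2")
# Only when the retransmitted packet 1 arrives is anything released.
after_1 = rx.receive(1, b"JS-part-1")
```

Both `after_2` and `after_3` are empty: the complete CSS file sits in the buffer, yet nothing reaches the browser until the unrelated JS packet has been retransmitted.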

HOL blocking in HTTP/3 over QUIC

Let’s summarize what we’ve learned so far:

- HTTP/1.1 had HOL blocking because it needs to send its responses in full and cannot multiplex them

- HTTP/2 solves that by introducing “frames” that indicate to which “stream” each resource chunk belongs

- TCP however does not know about these individual “streams” and just sees everything as 1 big stream

- If a TCP packet is lost, all later packets need to wait for its retransmission, even if they contain unrelated data from different streams. TCP has Transport Layer HOL blocking.

QUIC was inspired by HTTP/2’s framing-approach and also adds its own frames; in this case the STREAM frame. The stream id, which was previously in HTTP/2’s DATA frame, is now moved down to the Transport Layer in QUIC’s STREAM frame.
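To see why moving the stream id into the transport layer matters, the sketch below orders data per stream instead of per connection. It assumes that each STREAM frame carries a stream id and a byte offset within that stream, as QUIC's STREAM frames do. The class `PerStreamReceiver` and the sample data are hypothetical, and the sketch leaves out packets, acknowledgements, and flow control entirely.

```python
from collections import defaultdict


class PerStreamReceiver:
    """Delivers bytes in order within each stream, independently of other streams."""

    def __init__(self) -> None:
        self.next_offset: dict[int, int] = defaultdict(int)
        self.pending: dict[int, dict[int, bytes]] = defaultdict(dict)
        self.delivered: dict[int, bytes] = defaultdict(bytes)

    def receive(self, stream_id: int, offset: int, data: bytes) -> bytes:
        """Store one STREAM frame and return the bytes now deliverable on its stream."""
        self.pending[stream_id][offset] = data
        released = b""
        while self.next_offset[stream_id] in self.pending[stream_id]:
            chunk = self.pending[stream_id].pop(self.next_offset[stream_id])
            released += chunk
            self.next_offset[stream_id] += len(chunk)
        self.delivered[stream_id] += released
        return released


rx = PerStreamReceiver()
# The packet carrying stream 1 (JS) is lost; stream 2 (CSS) arrives intact.
css_a = rx.receive(2, 0, b"CSS-a")
css_b = rx.receive(2, 5, b"CSS-b")
js_before_retransmit = rx.delivered[1]
# The retransmitted JS data arrives later and only affects stream 1.
js_a = rx.receive(1, 0, b"JS-a")
```

Unlike the single in-order byte stream of TCP, the gap in stream 1 does not hold back stream 2: the CSS data is delivered as soon as it arrives.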